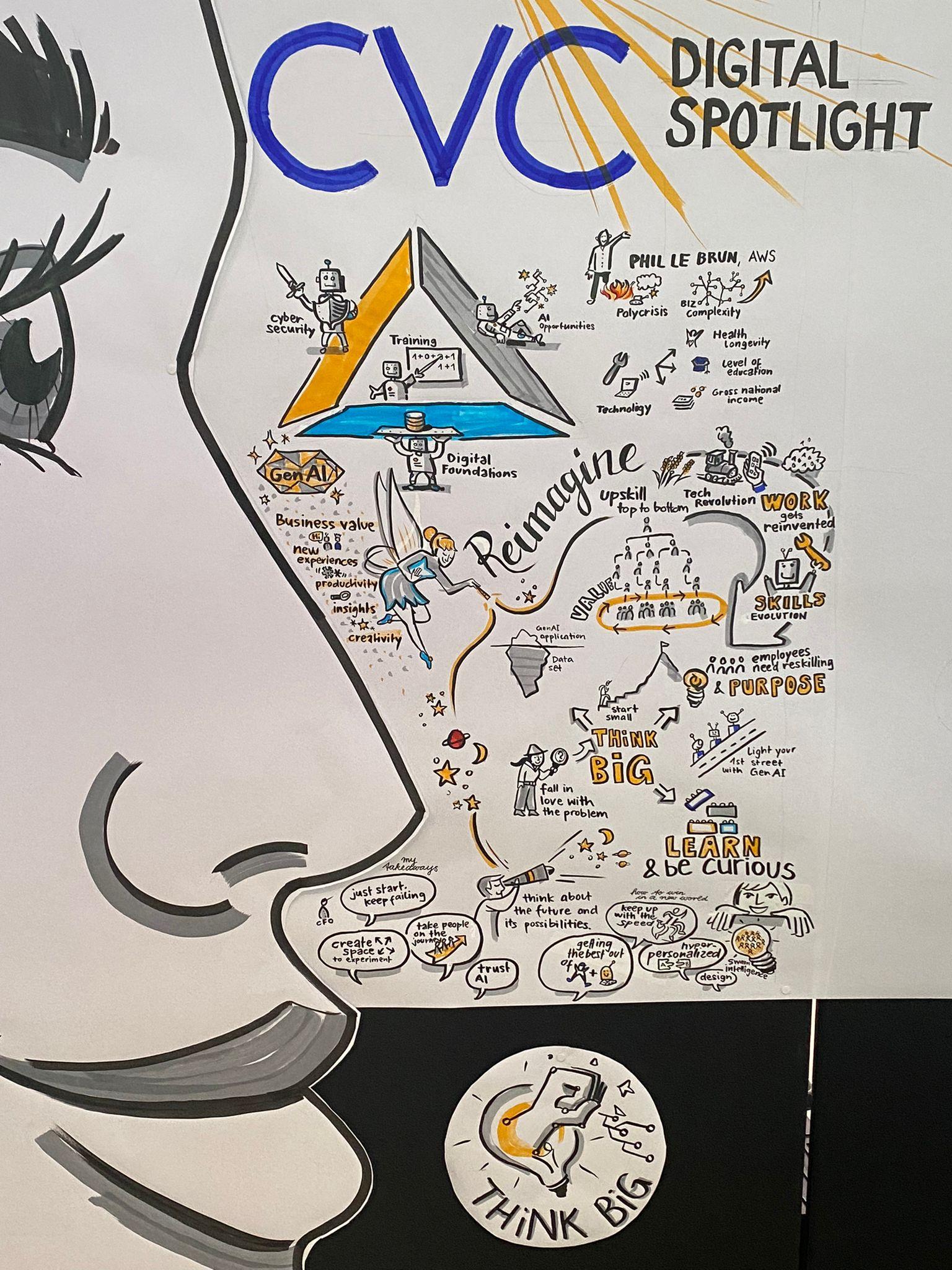

On March 19th, we had the opportunity to share our ideas and services at an exciting workshop in London, powered by CVC. The workshop, “How do I manage cyber risk in AI deployment?”, focused on Generative AI and risk management, with the aim of acquiring more knowledge about best practices and solutions from companies whose expertise is at the top of the field.

Before digging into what we presented on stage (one of our most successful case studies so far!), it is worth dedicating a minute to better understanding how AI can be a true enemy when managing cyber risks.

Safeguarding Your AI Deployment: Understanding the Risks

Security, and beyond security “trustworthiness”, is a fundamental pillar of any AI strategy, especially for the adoption or development of GenAI solutions, since these are built on Large Language Models (LLMs) whose training methods and data processing are not under the direct control of employees and developers.

The “A” in AI stands for “Artificial”, but it could equally stand for “Augmented”. On one side, the business opportunities (e.g. marketing, customer care, business intelligence) unleashed by the level of proficiency and insight that such solutions provide are without precedent and without alternatives; companies that are not willing to jump on this train will fall behind. On the other side, however, cyber security risks are tremendously augmented by solutions that, without proper governance and control, could escape the Organization’s control.

An application that is not secure is an application that we don’t trust. Trust has always been a business objective, but it has now become vital with AI solutions, whose language proficiency and output resemble those of humans: studies show that once people know that an output such as a statement could have been produced by AI, they doubt that any output is genuinely human. You can imagine the potential impact of a mistrustful customer on a company without a clear AI strategy and governance.

So what measures could be implemented to reduce the potential risks associated with AI?

Navigating AI Risks: Our Framework for Success

The security measures that BIP CyberSec recommends depend on the level of AI adoption selected by the company, and that level should result from an AI Governance Framework that identifies the target maturity level and the existing AI use cases. Our suggestion is to rely on and adapt AI frameworks that already exist, such as the NIST AI Risk Management Framework, which unsurprisingly suggests first defining solid Governance, then Mapping the existing risks before Measuring and Managing them (these are indeed the 4 NIST “Functions”: Govern, Map, Measure and Manage).

In line with business objectives and the AS-IS AI maturity, the Organization could face scenarios that range from simple access to existing AI models (e.g. via external APIs), to integration with LLMs (with different levels of fine-tuning), to the training of new models (e.g. accessing existing foundation models such as LLaMA).

- If your Organization is using an external API, measures such as access control and data protection should be in place, for example requiring users to log in and to confirm before sending potentially sensitive information. Include your service provider in your third-party risk management processes and ask the provider to share what processes and technologies are in place to support transparency, fairness, security and explainability.

- If your Organization is integrating its solutions with existing LLMs, consider putting security controls in place across the entire AI lifecycle:

  - At the Development stage, AI-related threat training should be provided to developers, and controls such as model cards and data cards can reduce the lack of accuracy and transparency.

  - At the Deployment stage, every new release of an AI solution should be subject to specific AI threat modelling and Red Teaming (e.g. using dedicated frameworks such as MITRE ATLAS and tools such as Microsoft Counterfit and MITRE Arsenal).

  - At the Operations stage, we are starting to see solutions for MLOps and MLDR (Machine Learning Detection and Response) that are capable of identifying suspicious behavior (e.g. prompt injection, data poisoning, model stealing).
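To make the external API scenario above more concrete, here is a minimal Python sketch of a "log in and confirm" gate placed in front of an AI service. Everything in it is an illustrative assumption rather than a BIP CyberSec tool or a provider API: the `User` class, the `guarded_send` function, the two regular expressions and the `send` and `confirm` callbacks are hypothetical names, and a real deployment would rely on a proper identity provider and a data loss prevention solution instead of hand-written patterns.

```python
import re
from dataclasses import dataclass
from typing import Callable, List

# Illustrative patterns only (assumption): real deployments would use a DLP tool.
SENSITIVE_PATTERNS = {
    "email address": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "card-like number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
}


@dataclass
class User:
    """Hypothetical user record; 'authenticated' would come from your login system."""
    name: str
    authenticated: bool


def find_sensitive(prompt: str) -> List[str]:
    """Return the labels of all patterns that match the prompt."""
    return [label for label, pattern in SENSITIVE_PATTERNS.items()
            if pattern.search(prompt)]


def guarded_send(user: User,
                 prompt: str,
                 send: Callable[[str], str],
                 confirm: Callable[[List[str]], bool]) -> str:
    """Forward the prompt to the external service only if the user is logged in
    and has explicitly confirmed any potentially sensitive content."""
    if not user.authenticated:
        raise PermissionError("user must log in before using the AI service")
    findings = find_sensitive(prompt)
    if findings and not confirm(findings):
        raise ValueError("prompt blocked: " + ", ".join(findings))
    # 'send' stands in for the call to the external API (hypothetical).
    return send(prompt)
```

In this sketch the call to the external model is injected as `send`, so the same gate can wrap any provider, and `confirm` can be wired to a dialog that shows the user what was detected before anything leaves the Organization.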

BIP CyberSec’s Support in Ensuring AI Assurance

In conclusion, while Security has always been important for any business model, it is now even more “fundamental” for any Organization that is willing to pursue an AI journey, since it is inevitably intertwined with “trustworthiness”. According to a MITRE survey conducted in 2023: “Only 39% of U.S. adults indicate that they believe today’s AI technologies are safe and secure, […] and most say they’re more concerned than excited about the technology. […] 85% want industry to transparently share AI assurance practices before bringing products equipped with AI technology to market”.

BIP CyberSec can support this AI assurance process in several ways:

- By establishing an AI Governance Framework that supports senior management in identifying the existing AI use cases top-down (instead of lagging behind projects that emerge bottom-up).

- By performing an AI Risk Assessment that considers impacts on individuals and society (as also required by ISO 42001 and the EU AI Act), thus providing a set of controls in line with the risks, which can also be offered as value to customers (including in terms of compliance with regulations).

- By protecting customer data with ethical hacking exercises (e.g. Red Teaming) that are updated to cope with new AI threats (e.g. prompt injection, data poisoning, model stealing).

Do you want to know more about how we can support the protection of your business? Contact us!